Over the past two years, conversations about artificial intelligence (AI) applications have centered primarily on data center computing. In its next iteration, AI traffic is likely to move to the edge. AI at the edge refers to running AI close to where the data is collected, such as on a PC, a car, industrial equipment, a mobile phone, or another consumer device.

While AI at the edge makes sense conceptually, current state-of-the-art technology is not quite ready to support it. Complicating factors include high computational intensity, parallel computing structures, power loads, latency, and high bandwidth requirements for inference workloads. These factors are currently constraining growth, although Truist Securities estimates the market at $81 billion, growing at a 10% CAGR.

Technical challenges aside, bringing AI to the edge will have numerous benefits for enterprises and consumers. Workloads would likely run more efficiently, with faster response times in mobile environments. Security and privacy are also thought to be considerably stronger in edge environments, because analytics can run locally instead of sending data off the device. The ability to work offline and potential cost savings are also key benefits.

But what will enable AI applications to occur at the edge?

First, less costly, more efficient computing power, which seemed unlikely just a year ago, has begun to emerge. When ChatGPT broke out, the mindset for large language models (LLMs) was "bigger is better." That bias has since shifted toward smaller, more nimble models that are more cost-effective, task-specific, and better suited to edge computing.

In this blog, we’ll tap into firsthand perspectives from our expert transcript library to discuss the major market opportunity that edge computing is primed to unlock, how existing players can capitalize on it, and views on future use cases of generative AI (genAI) at the edge.

Understanding Edge AI Adoption

In enterprise settings, AI-driven applications have run mostly in the data center because of the size, power, and complexity involved in processing model training data. Because of these technology hurdles, model training will likely stay in the data center. As AI has matured and the model inference market has grown, however, some of that processing can now happen locally.

Additionally, innovation in 5G and other wireless infrastructure has allowed some of that processing to occur locally as well. Over time, technology improvements will enable edge AI on endpoints such as PCs, handheld devices, and vehicles. This would let consumers experience AI applications directly and broaden the use cases for enterprise environments. Persistent challenges at the edge include limited processing power, memory, and storage relative to cloud-based environments.

Edge AI runs AI where the data is created rather than in a cloud computing environment or an offsite data center. This could open up paths to new revenue and efficiency in various end markets, including transportation, healthcare, agriculture, manufacturing, and retail. The ability to produce secure, real-time analytics at high speed and lower cost is attractive to enterprises and consumers alike.

While all of this holds promise, hurdles to adoption remain, primarily computational intensity, parallel computing structures like GPUs, power, latency, and, for mobile workloads, high-bandwidth memory and connectivity. At a recent event, Apple announced its new iPad and unveiled the M4 chip. The chip includes an advanced neural engine, stoking optimism around future mobile edge AI devices, as it reportedly addresses many of the roadblocks to broader consumer adoption.

The Outlook of AI at the Edge

At present, on-premises enterprise networks are critical for burgeoning AI applications. Networks are increasing capacity and reducing latency to prepare for coming technological shifts, building out last-mile delivery capabilities and infrastructure. Yet true edge AI functionality remains out of reach for now.

“For any edge deployments, it is a case where you have to deploy in the form of batch inferencing. Let’s say you have already trained a model and you have deployed it on-cloud. Your device is connected to the cloud. Edge device is connected to the cloud. For any inferencing requests like an end-user request, the device would give a call to the model deployed on the cloud. The model deployed on the cloud will give a response back to the device, and the end user will be able to see the output.”

– Former Manager, Pure Storage | Expert Transcript

Optimization at the edge is not only about smaller models. It’s about deploying smaller models that retain the level of performance achieved in the data center. Techniques such as pruning and quantization are being used to shrink model size. Meanwhile, efficiency techniques such as low-rank adaptation (LoRA), federated learning, matrix decomposition, weight sharing, memory optimization, and knowledge distillation are being used to optimize models for specific use cases at the edge.
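To make the two size-reduction techniques more concrete, here is a minimal, illustrative sketch in plain Python, assuming symmetric per-tensor int8 quantization and unstructured magnitude pruning. These are generic textbook variants chosen for illustration, not the methods of any specific vendor mentioned here. Storing weights as 8-bit integers instead of 32-bit floats cuts weight storage roughly fourfold, at the cost of a small rounding error; pruning zeroes out the weights with the smallest magnitudes.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: map floats onto [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale factor for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clip to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices sorted by |w|; the first k are the least important weights.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.01, 0.9]
    q, s = quantize_int8(w)
    print("int8 codes:", q, "scale:", s)
    print("reconstructed:", dequantize(q, s))
    print("50% pruned:", magnitude_prune(w, 0.5))
```

Because each code is the nearest integer to `w / scale`, the reconstruction error per weight is at most half the scale factor. Production toolchains typically add per-channel scales and calibration data, which this sketch omits.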

Hybrid AI to Define the Near Future

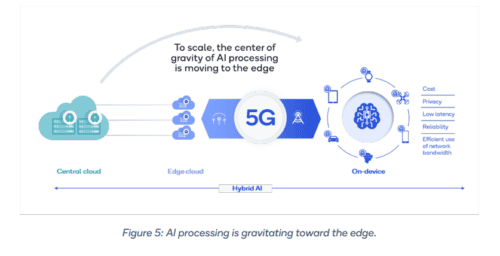

As genAI adoption grows and spreads to the edge, hybrid environments are more important than ever and are expected to define the next year or two. Just as computing has evolved into a mix of cloud and edge devices, AI processing will likely need to be distributed between the cloud and devices in order to scale. Smartphones, cars, personal computers, and Internet of Things (IoT) devices will split workloads between the cloud and the device itself to deliver powerful, efficient AI applications.
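One way to picture how a hybrid setup might split work is a simple routing policy that decides, per request, whether to run a small on-device model or call a larger cloud model. The sketch below is hypothetical: the `Request` fields, the `route` function, and the `ON_DEVICE_MAX_TOKENS` threshold are illustrative assumptions, loosely reflecting the benefits discussed in this article (offline operation, local privacy, and larger models for advanced reasoning), not any vendor's actual logic.

```python
from dataclasses import dataclass

# Hypothetical threshold; a real system would tune this per device and model.
ON_DEVICE_MAX_TOKENS = 2048


@dataclass
class Request:
    prompt_tokens: int              # size of the user's input
    needs_advanced_reasoning: bool  # task likely to exceed a small model
    contains_private_data: bool     # data the user prefers to keep local


def route(req: Request, online: bool) -> str:
    """Return 'device' or 'cloud' for a single inference request (hypothetical policy)."""
    if not online:
        return "device"  # offline: the local model is the only option
    if req.contains_private_data:
        return "device"  # keep sensitive data on the device
    if req.needs_advanced_reasoning or req.prompt_tokens > ON_DEVICE_MAX_TOKENS:
        return "cloud"   # heavy tasks go to the larger cloud model
    return "device"      # default: cheaper and lower latency locally


if __name__ == "__main__":
    print(route(Request(120, False, False), online=True))   # short everyday task
    print(route(Request(120, True, False), online=True))    # complex reasoning task
```

The checks are ordered so that hard constraints (connectivity, privacy) win over cost and capability preferences; a real product would likely weigh more signals, such as battery level or model availability.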

“These trends of being at the edge, not worrying about predetermined capacity, easier deployment models, removing some of the decision-making around operating systems, firewalls, and other configuration, that’s all played into the growth of edge compute, and it’s a much simpler model than some of these models of deploying applications on AWS, where you might have 30 AWS products involved in a single application.”

– Customer of Cloudflare | Expert Transcript

The main motivation for adopting hybrid AI architectures is cost savings. While companies such as Google, Meta, and Microsoft have remained aggressive in their AI capex plans, Apple’s approach has been more conservative and focused on the edge.

“Apple’s white paper releases like the recent one detailing ReALM (Reference Resolution As Language Modeling) have seemed to focus more on efficiency rather than the raw performance more common at companies like Microsoft and Google where the focus is on cloud-delivered AI. We think this supports our thesis that Apple sees edge AI as the key upside driver for its business.”

– Evercore ISI, May 2024 | Report

Massive generative AI models with billions of parameters place technological demands on infrastructure that will constrain growth potential. AI training and, even more so, inference could become unsustainably expensive at scale. Hybrid AI architectures might ease those constraints, with benefits for cost, energy use, performance, privacy, and personalization.

The evolution of genAI applications follows the pattern of past technology innovations. When first introduced, new technologies are expensive and often bulky, but as they mature, they achieve scale and lower costs. GenAI is on the same path and is on its way to becoming scalable both in the cloud and on-device for specific use cases.

The Future of Mobile Edge AI

As Google, Samsung, and Microsoft continue to push generative AI on PCs and mobile devices, Apple is moving to join them with OpenELM, a family of open-source LLMs and small language models (SLMs) that can run entirely on a single device rather than connecting to cloud servers.

First, the introduction of OpenELM hints that Apple may have followed its historical playbook of developing innovations for new markets in total secrecy. Second, the model itself is optimized for on-device use, meaning it can handle AI-powered tasks without relying on cloud servers, signaling a new era in mobile AI innovation. AlphaSense experts see a gradual evolution in the mobile AI experience:

“What I probably will see in the beginning is that we’ll probably see more of it as a main device and not so much a third-party application. You’ll probably see Apple and Samsung utilize generative AI tools to enhance the user experience with their own native applications.

As time goes on and costs come down, you’ll be able to start seeing some larger of the application companies developing tools and so forth, or gains that will run on generative AI and then it’ll just continue to trickle down until it’s widely accessible to everyone.”

– Former Senior Director, Samsung | Expert Transcript

Similarly, Microsoft recently announced Phi-3 Mini, expanding its SLM offerings. The model is touted as small enough to run on a mobile phone, another notable development in genAI. AlphaSense experts believe it’s Apple’s game to lose when it comes to mobile AI applications:

“Time is really on the side of Apple customers and Apple because what are people going to do? They’re going to throw out their iPhone because Siri can’t do something super amazing? It will happen, but when? It’s a big question. Maybe 2025, 2026, but it has to come because they can feel the pressure of everybody, even those who are not in the field, they know about AI and they’re asking for more.”

– Former Senior Manager, Apple | Expert Transcript

Time will tell what Apple and others unveil, but according to Apple’s recent earnings report, the next update on its AI strategy and roadmap is expected at the company’s Worldwide Developers Conference (WWDC) in June 2024.

“We see generative AI as a very key opportunity across our products, and we believe that we have advantages that set us apart there. And we’ll be talking more about it in—as we go through the weeks ahead.”

– Apple CEO | Q2 ’24 Earnings Report

With Apple and Microsoft introducing small language models, the two companies join a growing list of players offering models suited to the mobile ecosystem. This matters because companies with less complex tasks, smaller budgets, or a reluctance to share data in the cloud may begin to move away from larger AI models. Advanced reasoning may well remain on larger models, while more practical use cases, such as data analysis, shift to smaller ones. What’s clear at this point is that big tech is strategically repositioning itself around on-device genAI capabilities, which will ultimately drive a massive device upgrade cycle.

Key Companies Shaping Edge AI

AI at the edge is in its early stages of development. The following is a list of some of the key companies, both major industry leaders and emerging players, that are shaping the outlook for AI at the edge:

The Outlook for Edge AI

The outlook for edge AI is bright, and AlphaSense experts continue to weigh in on the dynamics. The success of AI at the edge relies not only on smaller, more efficient, and less costly models but also on innovation in system and device performance. It will require optimizing both the models and the infrastructure they run on. Hybrid AI, running both in the cloud and on-device, is expected to represent the next iteration of computing.

Through AlphaSense, we will follow the move toward AI at the edge, and the companies that succeed in making it, from a first-party viewpoint. As the industry continues to innovate and grow, AlphaSense will monitor emerging trends and competitive dynamics through our Expert Insights.

Ready to dive deep into the expert transcript library? Start your free trial of AlphaSense today.