Organizations amass inventories of valuable research (e.g., internal decks, memos, meeting notes, customer interviews, research notes) but struggle to leverage it in their work. One major cause is information silos: local drives, team folders, and access-dependent note-taking apps where internal research often remains inaccessible to the broader company.

There’s a massive cost to these information roadblocks, resulting in research blind spots, laborious data gathering, endless duplication of efforts across different teams, and a diminished ROI on research.

But even when the right information is available, the sheer amount of it makes it difficult for teams to find the right pieces at the right time. The integration of generative AI (genAI) technologies into workflows is relieving some of these challenges, and knowledge workers are embracing the technology’s ability to help them surface relevant insights instantly.

Our 2023 State of Gen AI & Market Intelligence report, which surveyed 500-plus professionals across various industries, including Corporate Development, Corporate Strategy, and Competitive Intelligence, found that a vast majority (over 80%) of respondents plan to leverage genAI tools in their research this coming year.

Read on to learn about the challenges that stem from research blind spots and how genAI specifically combats these everyday workplace obstacles.

The Cost of Inefficient Knowledge Sharing

There’s no underplaying the potential repercussions of poor organizational knowledge sharing. The tangible financial impact alone resulting from wasted time and suboptimal decision-making would make clear the severity of this issue to any C-Suite leader.

Take enterprise content, for instance: it can become siloed, making it difficult to harness firmwide intellectual property. This silo effect is most often seen at acquisitive firms that have not yet fully integrated the research and IP that sits within the assets they have acquired.

According to assessments by Panopto and YouGov, major U.S. corporations suffer annual losses exceeding $40 million as a result of everyday operational inefficiencies directly linked to inadequate knowledge sharing. Panopto’s research also revealed a concerning trend: new hires typically waste 200-plus hours on inefficient processes while familiarizing themselves with their new roles. A lack of knowledge transfer and of access to vital information is cited as the cause of this lost time.

To this end, most organizations have, at some point, implemented Knowledge Management Systems (KMS) to combat these internal knowledge sharing issues. Despite best efforts, the outcomes often fall short of expectations. In many cases, even when you do know where to look for a specific piece of information, it can take multiple clicks through folders to reach it, followed by an over-reliance on CTRL+F to find specific tidbits within a document.

GenAI Improves Discoverability of Internal Documents

Across organizations, one need is nearly universal: to render internal research as discoverable and manageable as the external content being published in public marketplaces. And while the use cases for genAI seem endless, C-Suite execs have taken particular note of the technology’s ability to eliminate internal content silos and improve the discoverability of internal research and documents.

The solution lies in leveraging genAI’s machine learning algorithms. By understanding not only the content of each document but also the context of each paragraph, sentence, and word used, genAI can begin to make sense of data in ways that we never thought possible. Traditional search methods often struggle to provide efficient and accurate results, leading to time-consuming searches and frustrated employees. GenAI addresses these challenges by harnessing the power of natural language processing and machine learning.

By understanding the context and semantics of queries, genAI refines search algorithms, delivering more relevant and precise results so that crucial internal documents are more easily found—and countless hours are not spent resharing information that already exists. This technology goes beyond keyword matching by considering the intent behind each search. Employees can now access critical information swiftly, boosting productivity and reducing the burden on IT support.
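
To illustrate the difference between keyword matching and meaning-based retrieval described above, here is a minimal Python sketch. It is a toy under stated assumptions, not how any particular product works: the hand-written `CONCEPTS` map is a hypothetical stand-in for a learned language model, and the sample documents are invented for illustration.

```python
import math
from collections import Counter

# Hypothetical concept map standing in for a learned model: different
# surface words that share a meaning are mapped to the same concept.
CONCEPTS = {
    "acquisition": "deal", "merger": "deal", "buyout": "deal", "deal": "deal",
    "memo": "writeup", "writeup": "writeup", "deck": "writeup",
    "revenue": "sales", "sales": "sales",
}

def tokens(text):
    """Lowercase the text and strip basic punctuation from each word."""
    return [w.strip(".,?!").lower() for w in text.split()]

def keyword_match(query, doc):
    """Exact matching: every query word must literally appear in the document."""
    doc_words = set(tokens(doc))
    return all(w in doc_words for w in tokens(query))

def concept_vector(text):
    """Count concepts instead of raw words, so synonyms land in the same slot."""
    return Counter(CONCEPTS[w] for w in tokens(text) if w in CONCEPTS)

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def semantic_rank(query, docs):
    """Order documents by similarity of meaning to the query, best first."""
    q = concept_vector(query)
    return sorted(docs, key=lambda d: cosine(q, concept_vector(d)), reverse=True)

# Invented sample documents, for illustration only.
docs = [
    "Quarterly sales figures for the region",
    "Investment memo on the proposed buyout",
]
query = "acquisition writeup"
```

With these samples, `keyword_match(query, doc)` fails for both documents because neither contains the literal words "acquisition" or "writeup", while `semantic_rank(query, docs)` places the investment memo first because "buyout" and "memo" map to the same concepts as the query terms.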

Furthermore, genAI continuously learns from user interactions, adapting its search capabilities to evolving organizational needs. Its ability to analyze vast datasets ensures that even obscure or complex queries yield meaningful results. This fosters a culture of knowledge sharing and collaboration, as employees feel empowered to explore and contribute to the wealth of internal documentation.

Selecting the Right GenAI Tool for Your Enterprise

Defining your use cases for genAI and LLMs should ultimately drive what type of model and overall product you’ll integrate into your workflows. If you have a large volume of lower complexity work (e.g., brainstorming, positioning statements, email copy generation, or other non-mission critical work), a general-purpose model may be a great fit.

As workflows become more mission critical, the need for accuracy increases and the tolerance for error decreases. In those cases, or where there is a higher security threshold for source content, more specialized models may better fit a client’s requirements.

Use cases that may require higher accuracy and data security include:

- Surfacing insights in pitch decks, investment memos, or other writeups that typically sit in a shared drive.

- Resurfacing work done on previous investments or deals, when you start a new engagement with a client or revisit a previously considered investment.

- Onboarding new hires without institutional knowledge, so they can digest internal historical knowledge of a deal or investment and what was done for it.

- Migrating insights and data when divisions or teams come together, to retain internal knowledge and avoid duplicative research.

The greatest impact comes where the large language model has domain expertise, delivering higher precision and recall, improved information retrieval, less bias, computational efficiencies, and targeted knowledge extraction, all of which lead to an improved user experience.

An evaluation of model characteristics could include mapping your use case requirements against factors such as a model’s factual consistency, coherence or fluency, granularity of detail, or redundancy in output responses.
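
One way such a mapping might be sketched is as a weighted score per candidate model. The Python below is a rough illustration only: the factor names follow the paragraph above, while the weights, the candidate names, and every score are hypothetical placeholders rather than measurements of any real model.

```python
def fit_score(requirements, model_scores):
    """Weighted average of a model's factor scores (0.0 to 1.0),
    weighted by how much each factor matters to the use case."""
    total_weight = sum(requirements.values())
    if total_weight == 0:
        raise ValueError("at least one requirement needs a non-zero weight")
    weighted = sum(
        weight * model_scores.get(factor, 0.0)  # a missing factor scores 0
        for factor, weight in requirements.items()
    )
    return weighted / total_weight

def rank_models(requirements, candidates):
    """Return candidate names ordered from best to worst fit."""
    return sorted(
        candidates,
        key=lambda name: fit_score(requirements, candidates[name]),
        reverse=True,
    )

# Hypothetical weights for a mission-critical research use case.
requirements = {
    "factual_consistency": 5,
    "coherence": 2,
    "granularity": 3,
    "low_redundancy": 1,
}

# Hypothetical scores, invented for illustration; not benchmark results.
candidates = {
    "general_purpose": {
        "factual_consistency": 0.6, "coherence": 0.9,
        "granularity": 0.5, "low_redundancy": 0.7,
    },
    "domain_specialized": {
        "factual_consistency": 0.9, "coherence": 0.8,
        "granularity": 0.8, "low_redundancy": 0.7,
    },
}
```

Calling `rank_models(requirements, candidates)` orders the candidates by fit. The value of the exercise is less the final number than being forced to state which factors matter most for a given workflow.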

Restricting access to certain documents is common to any firm and must be supported within any enterprise intelligence solution. Document entitlements must flow through to genAI summarization and chat experiences so that no user gains a means of accessing content sets they are not otherwise permitted to see, a requirement often overlooked by vendors.
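
As a rough sketch of what entitlement-aware summarization could mean in practice, the Python below filters documents by the user's groups before any text is handed to a summarizer or chat model, so restricted content never reaches the model on that user's behalf. The `Document` class, the group names, and the `build_context` helper are all hypothetical and are not drawn from any specific product.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset  # groups entitled to read this document

def entitled_documents(user_groups, documents):
    """Keep only documents that share at least one group with the user."""
    groups = set(user_groups)
    return [d for d in documents if groups & d.allowed_groups]

def build_context(user_groups, documents):
    """Assemble the text a summarizer or chat model is allowed to see
    for this user. The filter runs before the model is involved."""
    return "\n".join(d.text for d in entitled_documents(user_groups, documents))

# Invented sample data, for illustration only.
library = [
    Document("memo-1", "Deal memo for Project X", frozenset({"deal-team"})),
    Document("hr-7", "Confidential compensation review", frozenset({"hr"})),
    Document("deck-3", "Market overview deck", frozenset({"deal-team", "research"})),
]
```

For example, `build_context(["research"], library)` contains only the market overview deck. The design choice here is to filter at retrieval time rather than asking the model to withhold restricted content after it has already seen it.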

What an LLM Needs to Include

The non-negotiable aspects of a model include:

- Model training, including content sourcing for training purposes

- Document processing (e.g., normalization, company name recognition, topic extraction) and permissioning capabilities

- Broader semantic search capabilities, relevancy algorithms, sentiment analysis, and topic, theme, or KPI identification, all of which require domain expertise to do well

- Scalability features including user management, broad system connectors, infrastructure compatibility, and collaboration or workflow tools

- Front-end interfaces and productivity tools, which should not be underestimated given the broader change management that may be required

All of these play fundamental roles in how usable and scalable a solution will be, and each requires distinct consideration and scoping.

LLMs for GenAI: Should You Build or Buy?

Even as a slew of new genAI tools enters the market, organizations are grappling with a fundamental decision: whether to procure an off-the-shelf solution or embark on the journey of crafting a bespoke solution from the ground up. This strategic dilemma has become a focal point for the CTOs and IT teams at AlphaSense’s clients, who engage in robust discussions with us to determine the best path forward.

CTOs weighing the build or buy question should know that building LLMs that generate effective output takes significant time, resources, and capital. From acquiring, cleaning, and curating large data sets for training, to computational resources (hardware, software, cloud services), to hiring and ramping up personnel, to ongoing maintenance and updates, the list is seemingly endless.

While companies realize the potential positive impacts genAI can have on their business, implementing it alone can be challenging. But considering external solutions leads to questions about data security and data control, flexibility and customization, reliability and scalability, implementation times, and implementation costs.

See how AlphaSense compares with the top enterprise search platforms in our comprehensive Enterprise Search Software 2024 Buyer’s Guide.

AlphaSense’s Enterprise Intelligence

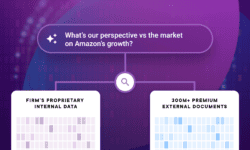

At AlphaSense, we understand the importance of having a product that delivers the results and the security every enterprise requires today. Our newest solution, Enterprise Intelligence, uses end-to-end encryption to secure your information and streamlines the ability to search, instantly verify summarizations, and ask questions across both your internal research documents and premium external market intelligence sources (filtering them by company name recognition, topic identification and extraction, sentiment analysis, and synonym recognition).

By leveraging multiple concurrent data connections, across the full spectrum of diverse content management systems, AlphaSense offers a unified content set and federated search experience that fully indexes and scores documents for easy retrieval via our Search, Dashboards, Alerts, and a natural language chat experience.

Oftentimes, historical analyses are well captured but not easily leveraged. This is where our clients benefit: using the full AlphaSense suite of solutions, they can not only interrogate that historical data set, but also search it and discover insights from summarizations of it.

Interrogating this content has been equated to asking questions of a team member who has been part of every deal, meeting note, deck, and investment recommendation. And when clients layer these features on their proprietary knowledge base, they start creating more tailored results and summarizations that seamlessly blend internal research with relevant external perspectives.

AlphaSense Keeps Your Internal Data Secure

As you explore layering technology on your valuable internal content and IP, security needs to be front and center. To that end, AlphaSense is committed to the security and privacy of your data.

Our platform leverages end-to-end encryption of customer information, a zero-trust security model, flexible deployments, and robust entitlement awareness. Further, our secure cloud environment complies with global security standards (SOC 2 and ISO 27001) and undergoes regular, accredited third-party penetration testing. Additionally, we offer FIPS 140-2 standard encryption on all content, as well as SAML 2.0 integration to support user authentication.

And when it comes to our proprietary genAI technology, all queries and data fed into and generated by smart summarizations remain within the platform, with no third parties involved. In addition to being available as an AlphaSense-managed solution in our secure cloud, Enterprise Intelligence can also be deployed via a customer-managed private cloud. This may appeal to customers who need to comply with more stringent internal information security policies or controls.

Start a conversation today to explore how AlphaSense’s Enterprise Intelligence can improve the search and discovery of insights across your internal content.

Don’t miss our State of Generative AI & Market Intelligence Report 2023.